Are you eager to dive into the world of generative AI and enhance your understanding of model optimization? The Udemy course "Quantization for GenAI Models" offers an engaging path to mastering quantization, a technique that shrinks model size and speeds up inference while keeping the loss in accuracy small. Whether you're just starting out or looking to deepen your knowledge, this course is designed to help you navigate the complexities of quantization in generative AI.
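As a rough illustration of where the size savings come from, here is one common formulation. It is not necessarily the exact scheme the course teaches. Uniform affine quantization maps a real value $x$ to a $b$-bit unsigned integer $q$ using a scale $s$ and a zero point $z$. Dequantization then approximately recovers the original value:

$$
q = \operatorname{clamp}\!\left(\operatorname{round}\!\left(\frac{x}{s}\right) + z,\; 0,\; 2^{b}-1\right), \qquad \hat{x} = s\,(q - z)
$$

For a hypothetical 7-billion-parameter model, and ignoring the small overhead of storing $s$ and $z$, the weight storage works out as follows:

$$
7\times10^{9} \times 2\ \text{bytes} = 14\ \text{GB (FP16)}, \qquad 7\times10^{9} \times 0.5\ \text{bytes} = 3.5\ \text{GB (4-bit)}
$$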

What you’ll learn

In this course, students will acquire a solid foundation in the essential skills and technologies related to quantization for generative AI models. Key takeaways include:

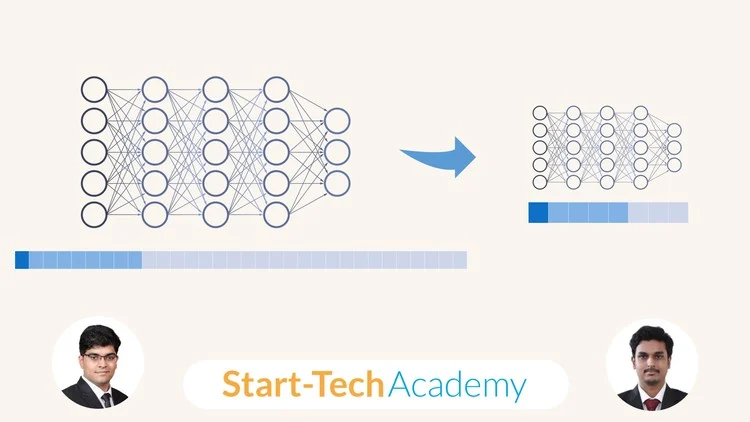

- Understanding Quantization Techniques: Learn the main types of quantization, such as weight and activation quantization, along with post-training quantization strategies.

- Model Optimization: Get hands-on experience optimizing neural-network-based generative AI models for better performance in real-world applications.

- Framework Familiarity: Gain proficiency in using popular machine learning frameworks such as TensorFlow and PyTorch to implement quantization techniques.

- Evaluation Metrics and Trade-offs: Understand how to evaluate the performance of quantized models and the trade-off between model accuracy and computational efficiency.

- Practical Applications: Explore case studies showcasing the application of quantization in various generative AI scenarios.
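The sketch below makes three of the ideas above concrete: weight quantization, post-training calibration for activations, and the accuracy-versus-size trade-off. It is a minimal pure-Python example that assumes symmetric, per-tensor quantization with a single scale. The function names (`quantize_symmetric`, `dequantize`, `calibrate_activation_scale`, `mse`) are hypothetical and do not come from the course or from any framework. Toolkits in PyTorch and TensorFlow use more elaborate schemes, such as per-channel scales, zero points, and integer kernels.

```python
import random

# Hypothetical helper names; a sketch of symmetric, per-tensor quantization only.

def quantize_symmetric(values, bits):
    """Map floats to signed integers in [-qmax, qmax] using one shared scale."""
    qmax = 2 ** (bits - 1) - 1              # 127 for 8 bits, 7 for 4 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer (Python rounds ties to even), then clamp.
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Approximately recover the original floats from the integers."""
    return [v * scale for v in q]


def calibrate_activation_scale(activation_batches, bits=8):
    """Post-training calibration for activations: derive the scale from the
    largest absolute value observed on sample (calibration) data."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(a) for batch in activation_batches for a in batch)
    return max_abs / qmax if max_abs > 0 else 1.0


def mse(a, b):
    """Mean squared error between original and reconstructed values."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    random.seed(0)
    # Synthetic stand-in for one layer's trained weights (not real model data).
    weights = [random.gauss(0.0, 0.05) for _ in range(10_000)]
    for bits in (8, 4):
        q, scale = quantize_symmetric(weights, bits)
        err = mse(weights, dequantize(q, scale))
        # Storage relative to FP32, ignoring the single stored scale value.
        print(f"{bits}-bit weights: {32 // bits}x smaller than FP32, MSE = {err:.2e}")

    # Activations: the scale comes from calibration batches, not from the weights.
    calib = [[random.gauss(0.0, 1.0) for _ in range(256)] for _ in range(4)]
    act_scale = calibrate_activation_scale(calib, bits=8)
    print(f"calibrated activation scale: {act_scale:.4f}")
```

For each bit width, the script prints the storage reduction relative to FP32 and the mean squared reconstruction error. The 4-bit error should be noticeably larger than the 8-bit error, which shows the accuracy-versus-efficiency trade-off in miniature.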

By the end of the course, learners will have the confidence and skills needed to apply quantization techniques effectively in their generative AI projects.

Requirements and course approach

This course is beginner-friendly, but it does assume some foundational knowledge of machine learning concepts and Python programming. Before enrolling, it’s recommended that you have:

- A basic understanding of neural networks and how they function.

- Familiarity with key machine learning terminology.

- Basic programming skills in Python.

The course balances theoretical concepts with practical implementation. Through a mix of video lectures, hands-on coding exercises, quizzes, and real-world examples, learners can engage actively with the material. The step-by-step approach means that even those with minimal experience can follow along and grasp the finer details of quantization.

Who this course is for

"Quantization for GenAI Models" is tailored for a diverse audience:

- Beginners in machine learning who want to develop a deeper understanding of generative AI and optimization techniques.

- Intermediate learners looking to enhance their skill set by integrating quantization into their model development process.

- Data scientists and AI practitioners who are interested in deploying efficient and optimized models in production environments.

- Developers working on AI applications seeking to improve model performance without incurring significant computational costs.

Regardless of your background, if you’re passionate about generative AI and eager to learn how to make models more efficient, this course is for you.

Outcomes and final thoughts

On completing the course, students can expect to walk away with a solid understanding of quantization and its applications in generative AI models. You'll also have practical skills for optimizing models in real-world tasks, such as reducing latency and making deployment more efficient.

In summary, "Quantization for GenAI Models" is an invaluable resource for anyone interested in the intersection of machine learning and model optimization. Its engaging format, comprehensive content, and practical applications make it an excellent choice for beginners and experienced learners alike who want to stay ahead in the rapidly evolving AI landscape. Dive in today and discover how to take your generative AI models to new heights!